O.S. MIDSEM by god

Compiled from different sources

by Chetan yadav {god}


1 >>Components of Operating System

An operating system is made up of several components, each performing a well-defined task. Although operating systems differ in structure, logically they have similar components. Each component must be a well-defined portion of the system with clearly described functions, inputs, and outputs.

An operating system has the following eight components:

1. Process Management

2. I/O Device Management

3. File Management

4. Network Management

5. Main Memory Management

6. Secondary Storage Management

7. Security Management

8. Command Interpreter System

The following sections explain each of these components in more detail:

Process Management

A process is a program, or a part of a program, that is loaded in main memory. A process needs certain resources, including CPU time, memory, files, and I/O devices, to accomplish its task. The process management component manages the multiple processes running concurrently on the operating system.

A program in the running state is called a process.

The operating system is responsible for the following activities in connection with process management:

- Create, load, execute, suspend, resume, and terminate processes.

- Switch the system among multiple processes in main memory.

- Provide communication mechanisms so that processes can communicate with each other.

- Provide synchronization mechanisms to control concurrent access to shared data and keep it consistent.

- Allocate and deallocate resources properly to prevent or avoid deadlock.

I/O Device Management

One of the purposes of an operating system is to hide the peculiarities of specific hardware devices from the user. I/O device management provides an abstract view of hardware devices and keeps their details away from applications, to ensure proper use of devices, to prevent errors, and to give users a convenient and efficient programming environment.

The tasks of the I/O device management component are as follows:

- Hide the details of hardware devices.

- Manage main memory for the devices using caching, buffering, and spooling.

- Maintain and provide custom drivers for each device.

File Management

File management is one of the most visible services of an operating system. Computers can store information in several different physical forms; magnetic tape, disk, and drum are the most common forms.

A file is a collection of related information defined by its creator. Files most commonly represent programs (in both source and object form) and data. Data files may be alphabetic, numeric, or alphanumeric.

A file is a sequence of bits, bytes, lines, or records whose meaning is defined by its creator and user.

The operating system implements the abstract concept of a file by managing mass storage devices, such as tapes and disks. Files are normally organized into directories to ease their use. These directories may contain files and other directories, and so on.

The operating system is responsible for the following activities in connection with file management:

- File creation and deletion

- Directory creation and deletion

- The support of primitives for manipulating files and directories

- Mapping files onto secondary storage

- File backup on stable (nonvolatile) storage media

Network Management

Network management is defined broadly because it involves several different components. It is the process of managing and administering a computer network, where a computer network is a collection of various types of computers connected with each other.

Network management comprises fault analysis, maintaining quality of service, provisioning of networks, and performance management.

Network management is the process of keeping the network healthy for efficient communication between different computers.

The features of network management are as follows:

- Network administration

- Network maintenance

- Network operation

- Network provisioning

- Network security

Main Memory Management

Memory is a large array of words or bytes, each with its own address. It is a repository of quickly accessible data shared by the CPU and I/O devices.

Main memory is a volatile storage device, which means it loses its contents on system failure or as soon as the power goes down.

The main motivation behind memory management is to maximize memory utilization on the computer system.

The operating system is responsible for the following activities in connection with memory management:

- Keep track of which parts of memory are currently being used and by whom.

- Decide which processes to load when memory space becomes available.

- Allocate and deallocate memory space as needed.

Secondary Storage Management

The main purpose of a computer system is to execute programs. These programs, together with the data they access, must be in main memory during execution. Since main memory is too small to permanently accommodate all data and programs, the computer system must provide secondary storage to back up main memory.

Most modern computer systems use disks as the principal on-line storage medium for both programs and data. Most programs, such as compilers, assemblers, sort routines, editors, and formatters, are stored on disk until loaded into memory, and then use the disk as both the source and destination of their processing.

The operating system is responsible for the following activities in connection with disk management:

- Free space management

- Storage allocation

- Disk scheduling

Security Management

The operating system is primarily responsible for all tasks and activities that happen in the computer system. The various processes in an operating system must be protected from each other's activities. For that purpose, the operating system provides mechanisms which ensure that files, memory segments, the CPU, and other resources can be operated on only by those processes that have gained proper authorization from the operating system.

Security management refers to a mechanism for controlling the access of programs, processes, or users to the resources defined by a computer system. This mechanism must provide a means of specifying the controls to be imposed, together with some means of enforcement.

Command Interpreter System

One of the most important components of an operating system is its command interpreter. The command interpreter is the primary interface between the user and the rest of the system. It executes a user command by calling one or more underlying system programs or system calls. It allows human users to interact with the operating system and provides them with a convenient programming environment.

Many commands are given to the operating system by control statements. A program which reads and interprets control statements is automatically executed. This program is called the shell; examples include the Windows DOS command window, Bash on Unix/Linux, and the C shell on Unix/Linux.

Other Important Activities

An operating system is a complex software system. Apart from the components and responsibilities mentioned above, the operating system performs many other activities. A few of them are listed below:

- Security − By means of passwords and similar techniques, it prevents unauthorized access to programs and data.

- Control over system performance − Recording delays between a request for a service and the response from the system.

- Job accounting − Keeping track of time and resources used by various jobs and users.

- Error detecting aids − Production of dumps, traces, error messages, and other debugging and error detecting aids.

- Coordination between other software and users − Coordination and assignment of compilers, interpreters, assemblers, and other software to the various users of the computer system.


Steps of a process

1. New

A program which is going to be picked up by the OS into main memory is called a new process.

2. Ready

Whenever a process is created, it directly enters the ready state, in which it waits for the CPU to be assigned. The OS picks new processes from secondary memory and puts them all in main memory.

Processes which are ready for execution and reside in main memory are called ready state processes. There can be many processes in the ready state.

3. Running

One of the processes in the ready state is chosen by the OS according to the scheduling algorithm. Hence, if the system has only one CPU, at most one process is running at any given time. If the system has n processors, n processes can run simultaneously.

4. Block or wait

From the running state, a process can make the transition to the block or wait state, depending on the scheduling algorithm or the intrinsic behavior of the process.

When a process waits for a certain resource to be assigned or for input from the user, the OS moves the process to the block or wait state and assigns the CPU to other processes.

5. Completion or termination

When a process finishes its execution, it enters the termination state. The whole context of the process (its Process Control Block) is deleted and the process is terminated by the operating system.

6. Suspend ready

A process in the ready state which is moved from main memory to secondary memory due to a lack of resources (mainly primary memory) is said to be in the suspend ready state.

If main memory is full and a higher priority process arrives for execution, the OS has to make room for it in main memory by moving a lower priority process out to secondary memory. Suspend ready processes remain in secondary memory until main memory becomes available.

7. Suspend wait

Instead of removing a process from the ready queue, it is better to remove a blocked process which is waiting for some resource in main memory. Since it is already waiting for a resource to become available, it is better for it to wait in secondary memory and make room for the higher priority process. These processes complete their execution once main memory becomes available and their wait is finished.
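The seven states above can be sketched as a small state machine. This is only an illustration: the names `State`, `TRANSITIONS`, and `move` are hypothetical, and two transitions are assumptions not spelled out in the notes (running back to ready on preemption, and suspend wait to suspend ready when the awaited resource arrives while the process is still swapped out).

```python
from enum import Enum

class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "block or wait"
    TERMINATED = "terminated"
    SUSPEND_READY = "suspend ready"
    SUSPEND_WAIT = "suspend wait"

# Allowed moves between states (partly assumed, see the note above).
TRANSITIONS = {
    State.NEW: {State.READY},
    State.READY: {State.RUNNING, State.SUSPEND_READY},
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED},
    State.WAITING: {State.READY, State.SUSPEND_WAIT},
    State.SUSPEND_READY: {State.READY},
    State.SUSPEND_WAIT: {State.SUSPEND_READY, State.WAITING},
    State.TERMINATED: set(),
}

def move(current, target):
    """Return the new state, or raise if the transition is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target

state = State.NEW
for nxt in (State.READY, State.RUNNING, State.WAITING, State.READY,
            State.RUNNING, State.TERMINATED):
    state = move(state, nxt)
print(state.value)  # terminated
```

A new process cannot jump straight to running: `move(State.NEW, State.RUNNING)` raises `ValueError`, because every process first passes through the ready state.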

A Process Scheduler


A Process Scheduler schedules different processes to be assigned to the CPU based on particular scheduling algorithms. There are six popular process scheduling algorithms which we are going to discuss in this chapter −

- First-Come, First-Served (FCFS) Scheduling

- Shortest-Job-Next (SJN) Scheduling

- Priority Scheduling

- Shortest Remaining Time

- Round Robin (RR) Scheduling

- Multiple-Level Queues Scheduling

These algorithms are either non-preemptive or preemptive. Non-preemptive algorithms are designed so that once a process enters the running state, it cannot be preempted until it completes its allotted time, whereas preemptive scheduling is based on priority: the scheduler may preempt a low priority running process at any time when a high priority process enters the ready state.

First Come First Serve (FCFS)

- Jobs are executed on a first come, first served basis.

- It is a non-preemptive scheduling algorithm.

- Easy to understand and implement.

- Its implementation is based on a FIFO queue.

- Poor in performance, as the average wait time is high.

Wait time of each process is as follows −

| Process | Wait Time : Service Time - Arrival Time |
|---|---|
| P0 | 0 - 0 = 0 |
| P1 | 5 - 1 = 4 |
| P2 | 8 - 2 = 6 |
| P3 | 16 - 3 = 13 |

Average Wait Time: (0+4+6+13) / 4 = 5.75
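The wait times above can be reproduced with a short sketch. It assumes the same process set that appears in the SJN table further down (arrival times 0, 1, 2, 3 and execution times 5, 3, 8, 6), since the FCFS section does not list its inputs; the function name `fcfs_waits` is made up for illustration.

```python
def fcfs_waits(procs):
    """procs: list of (name, arrival, burst). Returns {name: wait time}."""
    time = 0
    waits = {}
    # Serve strictly in order of arrival (FIFO queue).
    for name, arrival, burst in sorted(procs, key=lambda p: p[1]):
        start = max(time, arrival)      # the CPU may sit idle until arrival
        waits[name] = start - arrival   # service time - arrival time
        time = start + burst
    return waits

PROCS = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = fcfs_waits(PROCS)
print(waits)                             # {'P0': 0, 'P1': 4, 'P2': 6, 'P3': 13}
print(sum(waits.values()) / len(waits))  # 5.75
```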

Shortest Job Next (SJN)

- This is also known as shortest job first, or SJF.

- This is a non-preemptive scheduling algorithm.

- Best approach to minimize waiting time.

- Easy to implement in batch systems where the required CPU time is known in advance.

- Impossible to implement in interactive systems where the required CPU time is not known.

- The processor should know in advance how much time a process will take.

Given: Table of processes, and their Arrival time, Execution time

| Process | Arrival Time | Execution Time | Service Time |
|---|---|---|---|
| P0 | 0 | 5 | 0 |
| P1 | 1 | 3 | 5 |
| P2 | 2 | 8 | 14 |
| P3 | 3 | 6 | 8 |

Waiting time of each process is as follows −

| Process | Waiting Time |
|---|---|
| P0 | 0 - 0 = 0 |
| P1 | 5 - 1 = 4 |
| P2 | 14 - 2 = 12 |
| P3 | 8 - 3 = 5 |

Average Wait Time: (0 + 4 + 12 + 5)/4 = 21 / 4 = 5.25
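A minimal sketch of non-preemptive SJN on the table above. The function name `sjn_waits` and the tie-breaking rule (earlier arrival wins when execution times are equal) are assumptions made for illustration.

```python
def sjn_waits(procs):
    """procs: list of (name, arrival, burst). Returns {name: wait time}."""
    pending = list(procs)
    time = 0
    waits = {}
    while pending:
        ready = [p for p in pending if p[1] <= time]
        if not ready:
            # Nothing has arrived yet: jump to the next arrival.
            time = min(p[1] for p in pending)
            continue
        # Pick the shortest job among those that have arrived.
        job = min(ready, key=lambda p: (p[2], p[1]))
        pending.remove(job)
        name, arrival, burst = job
        waits[name] = time - arrival
        time += burst   # non-preemptive: run to completion
    return waits

PROCS = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = sjn_waits(PROCS)
print(waits)                             # {'P0': 0, 'P1': 4, 'P3': 5, 'P2': 12}
print(sum(waits.values()) / len(waits))  # 5.25
```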

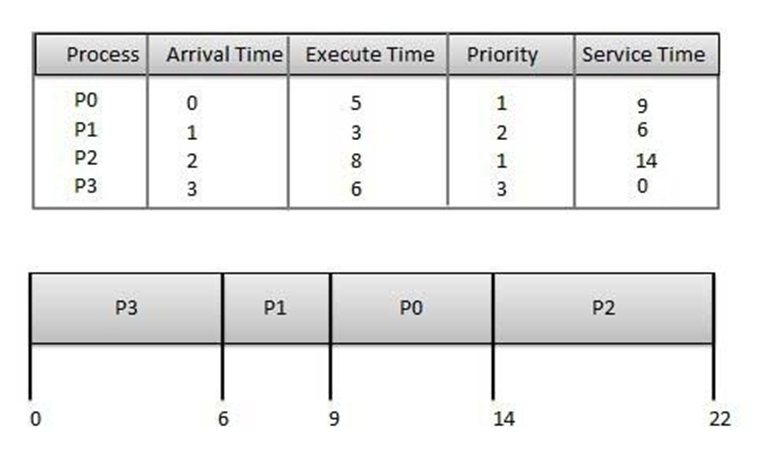

Priority Based Scheduling

- Priority scheduling is a non-preemptive algorithm and one of the most common scheduling algorithms in batch systems.

- Each process is assigned a priority. The process with the highest priority is executed first, and so on.

- Processes with the same priority are executed on a first come, first served basis.

- Priority can be decided based on memory requirements, time requirements, or any other resource requirement.

Given: Table of processes, and their Arrival time, Execution time, and priority. Here, 1 is considered the lowest priority.

| Process | Arrival Time | Execution Time | Priority | Service Time |
|---|---|---|---|---|
| P0 | 0 | 5 | 1 | 0 |
| P1 | 1 | 3 | 2 | 11 |
| P2 | 2 | 8 | 1 | 14 |
| P3 | 3 | 6 | 3 | 5 |

Waiting time of each process is as follows −

| Process | Waiting Time |
|---|---|
| P0 | 0 - 0 = 0 |
| P1 | 11 - 1 = 10 |
| P2 | 14 - 2 = 12 |
| P3 | 5 - 3 = 2 |

Average Wait Time: (0 + 10 + 12 + 2)/4 = 24 / 4 = 6
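The table above can be checked with a small sketch of non-preemptive priority scheduling. As in the table, a larger number means a higher priority, and ties are broken first come, first served; the function name `priority_waits` is hypothetical.

```python
def priority_waits(procs):
    """procs: list of (name, arrival, burst, priority). Returns {name: wait}."""
    pending = list(procs)
    time = 0
    waits = {}
    while pending:
        ready = [p for p in pending if p[1] <= time]
        if not ready:
            time = min(p[1] for p in pending)
            continue
        # Highest priority first; among equals, the earliest arrival.
        job = max(ready, key=lambda p: (p[3], -p[1]))
        pending.remove(job)
        name, arrival, burst, _ = job
        waits[name] = time - arrival
        time += burst   # non-preemptive: run to completion
    return waits

PROCS = [("P0", 0, 5, 1), ("P1", 1, 3, 2), ("P2", 2, 8, 1), ("P3", 3, 6, 3)]
waits = priority_waits(PROCS)
print(waits)                             # {'P0': 0, 'P3': 2, 'P1': 10, 'P2': 12}
print(sum(waits.values()) / len(waits))  # 6.0
```

P0 runs first even though it has the lowest priority, because it is the only process that has arrived at time 0 and the algorithm never preempts.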

Shortest Remaining Time

- Shortest remaining time (SRT) is the preemptive version of the SJN algorithm.

- The processor is allocated to the job closest to completion, but it can be preempted by a newer ready job with a shorter time to completion.

- Impossible to implement in interactive systems where the required CPU time is not known.

- It is often used in batch environments where short jobs need to be given preference.
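The notes give no worked example for SRT, so the sketch below reuses the process set from the SJN table as an assumption. It also assumes integer time units and re-evaluates the choice of job at every tick; `srt_waits` is a made-up name.

```python
def srt_waits(procs):
    """procs: list of (name, arrival, burst). Returns {name: wait time}."""
    arrival = {name: arr for name, arr, _ in procs}
    burst = {name: b for name, _, b in procs}
    remaining = dict(burst)
    time = 0
    waits = {}
    while remaining:
        ready = [n for n in remaining if arrival[n] <= time]
        if not ready:
            time = min(arrival[n] for n in remaining)
            continue
        # Run the job with the least remaining time for one tick; a newly
        # arrived shorter job therefore preempts the current one.
        n = min(ready, key=lambda x: (remaining[x], arrival[x]))
        remaining[n] -= 1
        time += 1
        if remaining[n] == 0:
            del remaining[n]
            waits[n] = time - arrival[n] - burst[n]
    return waits

PROCS = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = srt_waits(PROCS)
print(waits)                             # {'P1': 0, 'P0': 3, 'P3': 5, 'P2': 12}
print(sum(waits.values()) / len(waits))  # 5.0
```

Here P1 preempts P0 at time 1 because it needs 3 units while P0 still needs 4.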

Round Robin Scheduling

- Round Robin is a preemptive process scheduling algorithm.

- Each process is given a fixed time to execute, called a quantum.

- Once a process has executed for the given time period, it is preempted and another process executes for a given time period.

- Context switching is used to save the states of preempted processes.

Wait time of each process is as follows −

| Process | Wait Time : Service Time - Arrival Time |
|---|---|
| P0 | (0 - 0) + (12 - 3) = 9 |
| P1 | (3 - 1) = 2 |
| P2 | (6 - 2) + (14 - 9) + (20 - 17) = 12 |
| P3 | (9 - 3) + (17 - 12) = 11 |

Average Wait Time: (9+2+12+11) / 4 = 8.5
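The figures above are consistent with a quantum of 3 applied to the process set from the SJN table; both are inferred from the times in the table rather than stated in the notes. The sketch below also assumes that processes arriving during a time slice join the ready queue ahead of the process that was just preempted. The name `round_robin_waits` is hypothetical.

```python
from collections import deque

def round_robin_waits(procs, quantum):
    """procs: list of (name, arrival, burst). Returns {name: total wait}."""
    arrival = {name: arr for name, arr, _ in procs}
    burst = {name: b for name, _, b in procs}
    remaining = dict(burst)
    pending = deque(sorted(procs, key=lambda p: p[1]))
    ready = deque()
    time = 0
    waits = {}
    while pending or ready:
        if not ready:
            time = max(time, pending[0][1])   # idle until the next arrival
        while pending and pending[0][1] <= time:
            ready.append(pending.popleft()[0])
        name = ready.popleft()
        run = min(quantum, remaining[name])
        time += run
        remaining[name] -= run
        # Admit arrivals from this slice before re-queueing the preempted job.
        while pending and pending[0][1] <= time:
            ready.append(pending.popleft()[0])
        if remaining[name] > 0:
            ready.append(name)
        else:
            waits[name] = time - arrival[name] - burst[name]
    return waits

PROCS = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = round_robin_waits(PROCS, quantum=3)
print(waits)                             # {'P1': 2, 'P0': 9, 'P3': 11, 'P2': 12}
print(sum(waits.values()) / len(waits))  # 8.5
```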

Multiple-Level Queues Scheduling

Multiple-level queues are not an independent scheduling algorithm. They make use of other existing algorithms to group and schedule jobs with common characteristics.

- Multiple queues are maintained for processes with common characteristics.

- Each queue can have its own scheduling algorithms.

- Priorities are assigned to each queue.

For example, CPU-bound jobs can be scheduled in one queue and all I/O-bound jobs in another queue. The Process Scheduler then alternately selects jobs from each queue and assigns them to the CPU based on the algorithm assigned to the queue.

Contiguous memory allocation // Fragmentation // Swapping // Demand Paging // What is a Page Fault?

Contiguous memory allocation is a method of allocating memory where a single block of memory is set aside for a particular purpose, such as storing data for an array. In this type of allocation, the memory locations for the block of memory are adjacent to each other, hence the name "contiguous."

Contiguous memory allocation is often used when a large block of memory is needed for a particular purpose, such as when storing a large array. One advantage of this type of allocation is that it can be accessed efficiently, as the memory locations are adjacent to each other. However, it can be more difficult to manage than non-contiguous allocation, as it requires more careful planning to ensure that there is enough contiguous memory available for the desired purpose.

How fragmentation arises depends on the memory allocation scheme. As processes are loaded into and removed from memory, the free memory is broken into small pieces that are too small to be allocated to incoming processes. This is called fragmentation.
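A minimal sketch of how fragmentation blocks a contiguous allocation. It assumes a first-fit policy, which the notes do not name, and a hypothetical free list of `(start, length)` holes; all sizes are made up.

```python
def first_fit(holes, size):
    """Allocate `size` units from the first hole that is large enough.

    holes: list of (start, length) free blocks, modified in place.
    Returns the start address, or None if no single hole fits.
    """
    for i, (start, length) in enumerate(holes):
        if length >= size:
            if length == size:
                holes.pop(i)                               # hole used up
            else:
                holes[i] = (start + size, length - size)   # shrink the hole
            return start
    return None

# Three free holes left behind by processes that were unloaded.
holes = [(0, 100), (300, 150), (700, 120)]
print(sum(length for _, length in holes))  # 370 units free in total
print(first_fit(holes, 200))               # None: no contiguous block of 200
print(first_fit(holes, 120))               # 300
print(holes)                               # [(0, 100), (420, 30), (700, 120)]
```

The request for 200 units fails even though 370 units are free, because the free memory is split into pieces that are each too small.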

Swapping is the process of temporarily moving a process from main memory to secondary memory. Main memory is faster than secondary memory, but because RAM is small, a process that is inactive is transferred to secondary memory.

Demand Paging

Demand paging suggests keeping all pages of a process in secondary memory until they are required. In other words, do not load any page into main memory until it is required.

What is a Page Fault?

If the referenced page is not present in main memory, there is a miss; this is called a page miss or page fault.

The CPU has to access the missed page from secondary memory. If the number of page faults is very high, the effective access time of the system becomes very high.
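A rough sketch of both ideas. Pages are loaded only when referenced (demand paging), each miss is counted as a page fault, and the effective access time follows the usual formula EAT = (1 - p) × memory access time + p × page fault service time. The FIFO replacement policy, the reference string, and the timings are assumptions chosen for illustration.

```python
from collections import deque

def count_page_faults(refs, num_frames):
    """Demand paging with FIFO replacement; returns the number of faults."""
    frames = deque()              # resident pages, oldest first
    faults = 0
    for page in refs:
        if page in frames:
            continue              # hit: page already in main memory
        faults += 1               # miss: fetch from secondary memory
        if len(frames) == num_frames:
            frames.popleft()      # evict the oldest resident page
        frames.append(page)
    return faults

def effective_access_time(p, mem_ns, fault_ns):
    """p is the page fault rate, between 0 and 1."""
    return (1 - p) * mem_ns + p * fault_ns

refs = [1, 2, 3, 1, 2, 4, 1]
print(count_page_faults(refs, num_frames=3))   # 5

# Hypothetical timings: 200 ns memory access, 8 ms fault service time.
low = effective_access_time(0.001, 200, 8_000_000)
high = effective_access_time(0.01, 200, 8_000_000)
print(round(low, 1), round(high, 1))
```

With these timings, even one fault per thousand accesses raises the effective access time from 200 ns to roughly 8,200 ns, which is why a high fault rate is so costly.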

Deadlock in OS | Scaler Topics


What is Deadlock in OS?

All the processes in a system require some resources, such as the central processing unit (CPU), file storage, and input/output devices, in order to execute. Once its execution is finished, a process releases the resources it was holding. However, when many processes run on a system, they compete for the resources they require for execution. This may give rise to a deadlock.

A deadlock is a situation in which two or more processes are blocked because each is holding a resource while also requiring a resource held by another process. Therefore, none of the processes can proceed.

Necessary Conditions for Deadlock

The four necessary conditions for a deadlock to arise are as follows.

- Mutual Exclusion: Only one process can use a resource at any given time, i.e. the resources are non-sharable.

- Hold and wait: A process is holding at least one resource and is waiting to acquire other resources held by some other process.

- No preemption: A resource can be released only voluntarily by the process holding it, i.e. after the process has finished with it.

- Circular Wait: A set of processes are waiting for each other in a circular fashion. For example, let's say there is a set of processes {P0, P1, P2, P3} such that P0 depends on P1, P1 depends on P2, P2 depends on P3, and P3 depends on P0. This creates a circular relation between all these processes, and they have to wait forever to be executed.

Example
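As one possible illustration, the circular-wait condition can be checked by looking for a cycle in a wait-for graph, where an edge from P0 to P1 means that P0 is waiting on a resource held by P1. The graph representation and the name `has_cycle` are assumptions for this sketch, and it uses the P0 to P3 chain described above.

```python
def has_cycle(wait_for):
    """wait_for maps each process to the list of processes it waits on."""
    WHITE, GREY, BLACK = 0, 1, 2   # unvisited, on current path, finished
    color = {}

    def visit(p):
        color[p] = GREY
        for q in wait_for.get(p, ()):
            state = color.get(q, WHITE)
            if state == GREY:
                return True        # reached a process already on the path
            if state == WHITE and visit(q):
                return True
        color[p] = BLACK
        return False

    return any(color.get(p, WHITE) == WHITE and visit(p)
               for p in list(wait_for))

circular = {"P0": ["P1"], "P1": ["P2"], "P2": ["P3"], "P3": ["P0"]}
chain = {"P0": ["P1"], "P1": ["P2"], "P2": ["P3"], "P3": []}
print(has_cycle(circular))  # True: circular wait
print(has_cycle(chain))     # False: P3 can finish and release its resources
```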

Methods of Handling Deadlocks in Operating System

Of the three methods below, the first two (prevention and avoidance) are used to ensure the system never enters a deadlock.

Deadlock Prevention

This is done by restraining the ways a request can be made. Since deadlock occurs when all the above four conditions are met, we try to prevent any one of them, thus preventing a deadlock.

Deadlock Avoidance

When a process requests a resource, the deadlock avoidance algorithm examines the resource-allocation state. If allocating that resource would send the system into an unsafe state, the request is not granted.

Therefore, it requires additional information, such as how many resources of each type are required by a process. If the system enters an unsafe state, it has to take a step back to avoid deadlock.
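One well-known way to examine the resource-allocation state is the safety check from the Banker's algorithm, which the notes do not name; it is used here only as an example. A state is safe if the processes can finish in some order, each with its remaining need met by the free resources plus those released by processes that finished before it. The numbers below are hypothetical (a single resource type with 12 units).

```python
def is_safe(available, allocation, need):
    """available: free units per resource type.
    allocation[i], need[i]: units held / still needed by process i."""
    work = list(available)
    finished = [False] * len(allocation)
    progressed = True
    while progressed:
        progressed = False
        for i, (alloc, nd) in enumerate(zip(allocation, need)):
            if not finished[i] and all(n <= w for n, w in zip(nd, work)):
                # Process i can run to completion and release what it holds.
                work = [w + a for w, a in zip(work, alloc)]
                finished[i] = True
                progressed = True
    return all(finished)

# Processes hold 5, 2, 2 units and still need 5, 2, 7; 3 units are free.
print(is_safe([3], [[5], [2], [2]], [[5], [2], [7]]))  # True
# Granting one more unit to the last process leaves 2 free: unsafe.
print(is_safe([2], [[5], [2], [3]], [[5], [2], [6]]))  # False
```

In the second call, the request would be refused: after the second process finishes, only 4 units are free, which is not enough for either of the other two.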

Deadlock Detection and Recovery

We let the system fall into a deadlock and, if it happens, we detect it using a detection algorithm and try to recover.

Some ways of recovery are as follows.

- Abort all the deadlocked processes.

- Abort one process at a time until the system recovers from the deadlock.

- Resource Preemption: Resources are taken one by one from a process and assigned to higher priority processes until the deadlock is resolved.
